1. What services does Trusys offer?

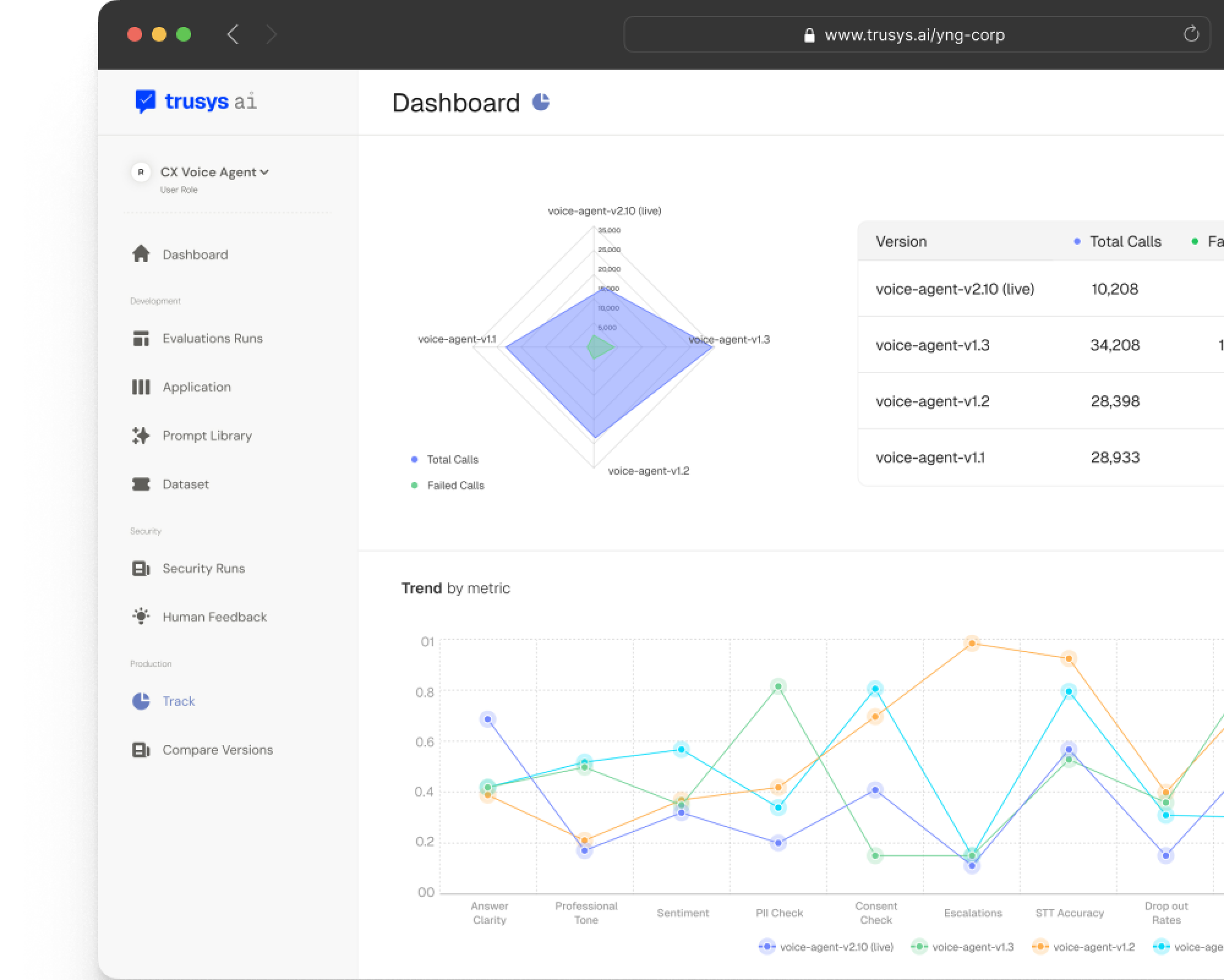

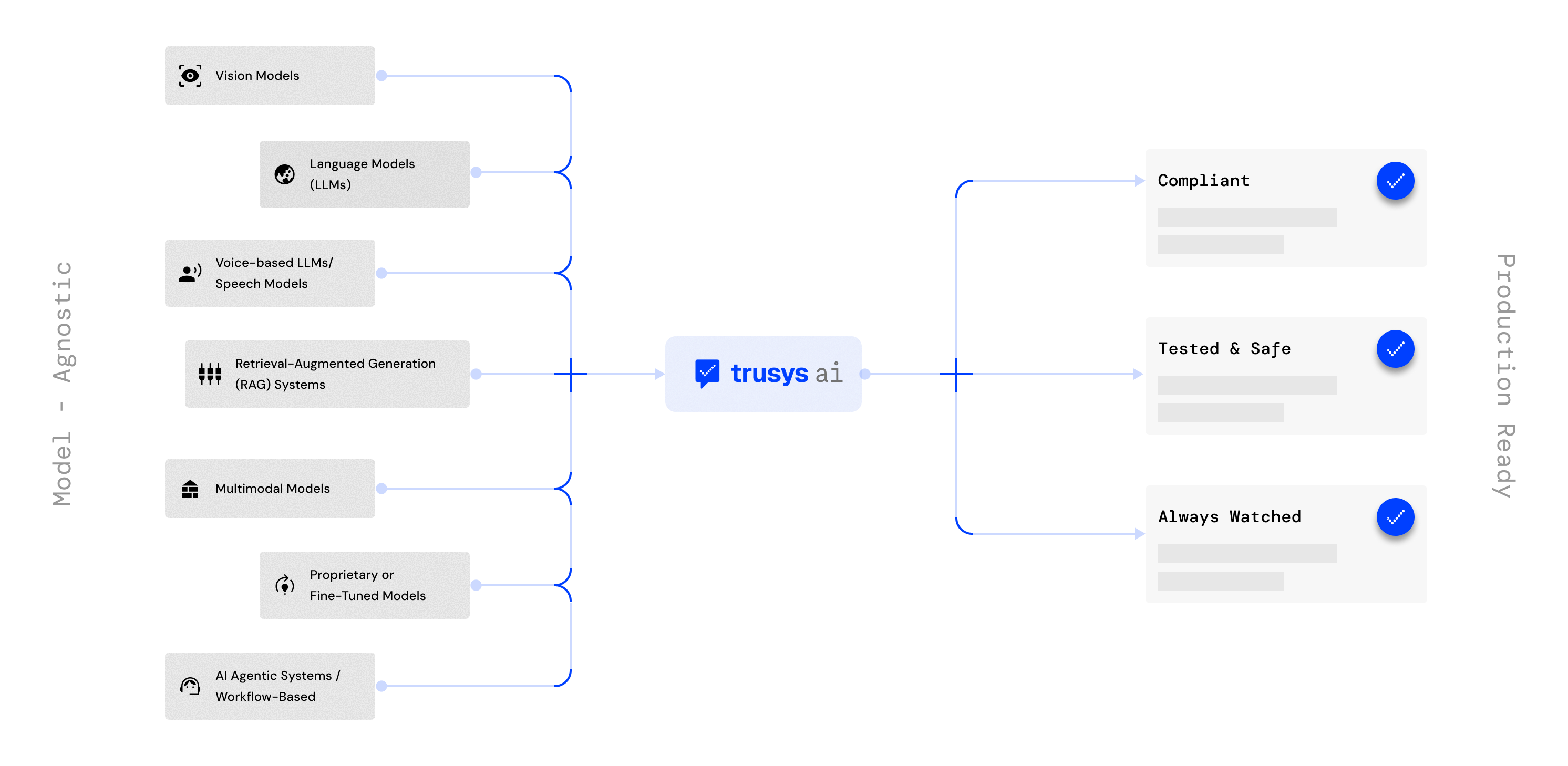

Trusys.ai is a platform to evaluate and monitor large language model (LLM) applications — from early experiments to production. It helps teams analyze outputs, track performance, and close the loop with real-world feedback.

2. How is Trusys different from other tools?

While similar in concept, Trusys goes beyond prompt evaluation by supporting voice-based LLM workflows, offering custom scoring metrics, and providing a unified space where product, QA, and ML teams can collaborate.

3. Can I use Trusys with any LLM provider?

Yes. Trusys is model-agnostic, so you can run evaluations and track performance with models from OpenAI, Anthropic, and Google, with open-source models like LLaMA or Mistral, or even with your own internal models.

4. Does Trusys support real-time monitoring of AI in production?

Absolutely. You can monitor responses in real time, detect drift or regressions, and analyze how model behavior evolves post-deployment.

5. Who is Trusys for?

Trusys is designed for AI product teams — including engineers, product managers, QA, and researchers — who want visibility, control, and confidence when shipping LLM-powered features.

6. How does Trusys handle data privacy and security?

Trusys is built with enterprise-grade security in mind. Your data is encrypted in transit and at rest. You have full control over what’s logged, stored, or shared, and we offer flexible deployment options — including on-prem and private cloud — to meet your compliance needs.