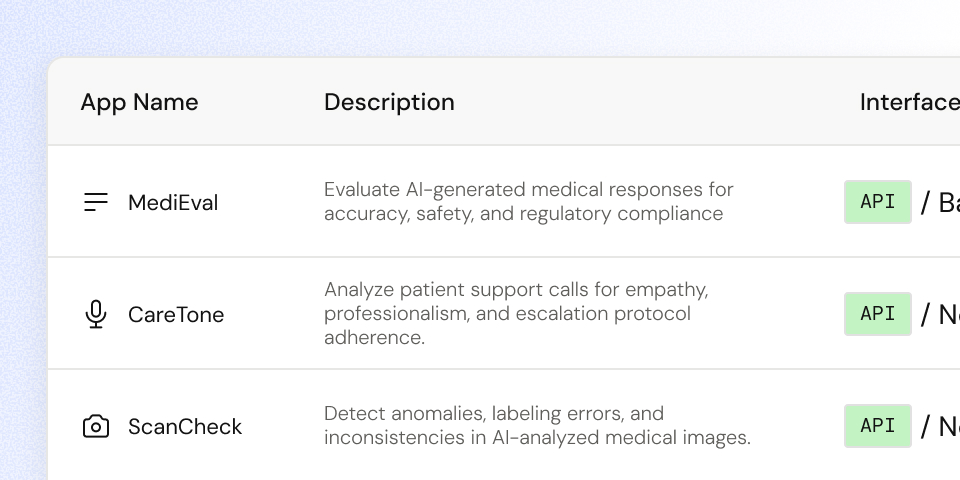

Detect hallucinations and ensure factual accuracy in AI outputs

Identify demographic or systemic biases across use cases

Compare models across tasks with standardized metrics

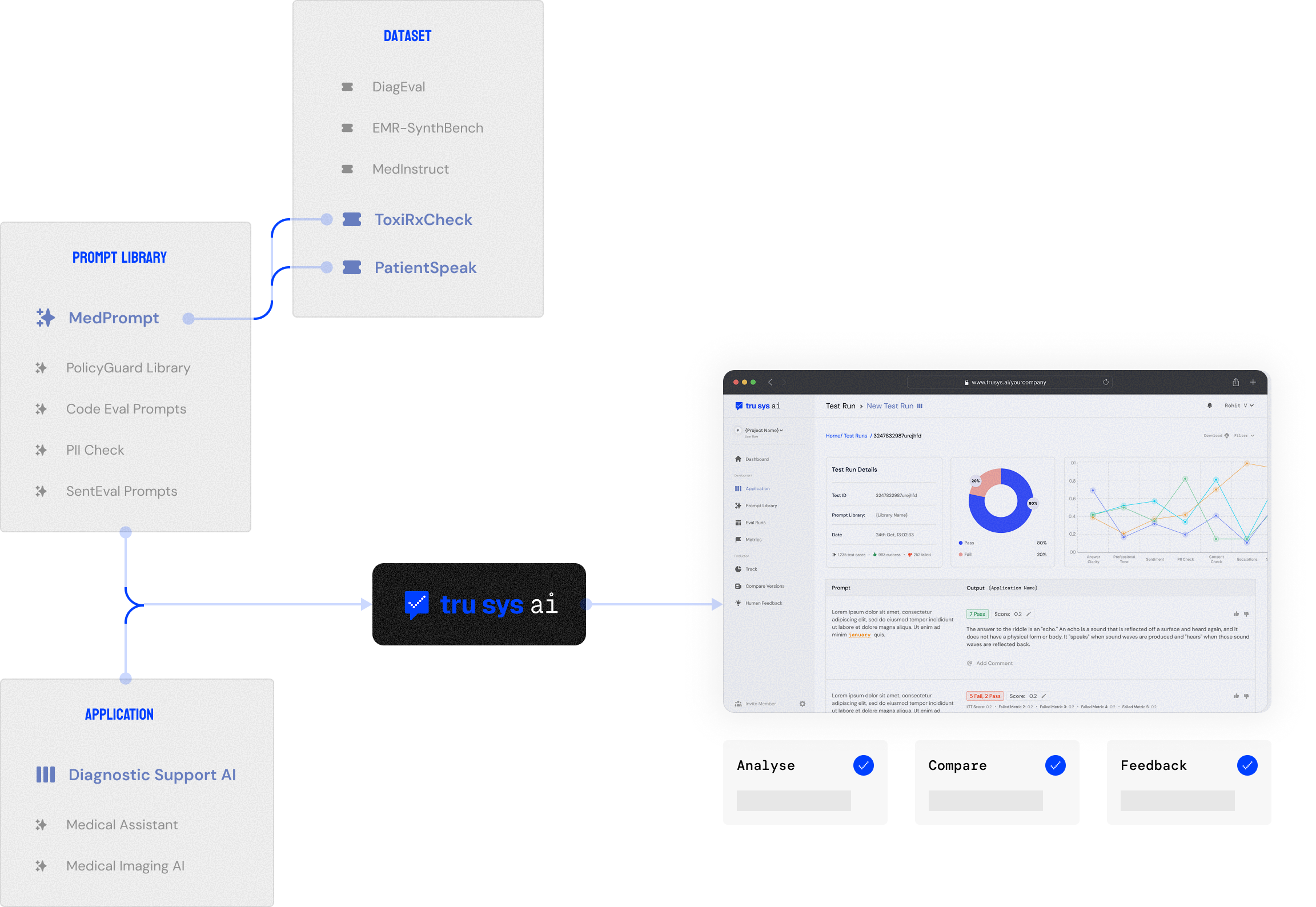

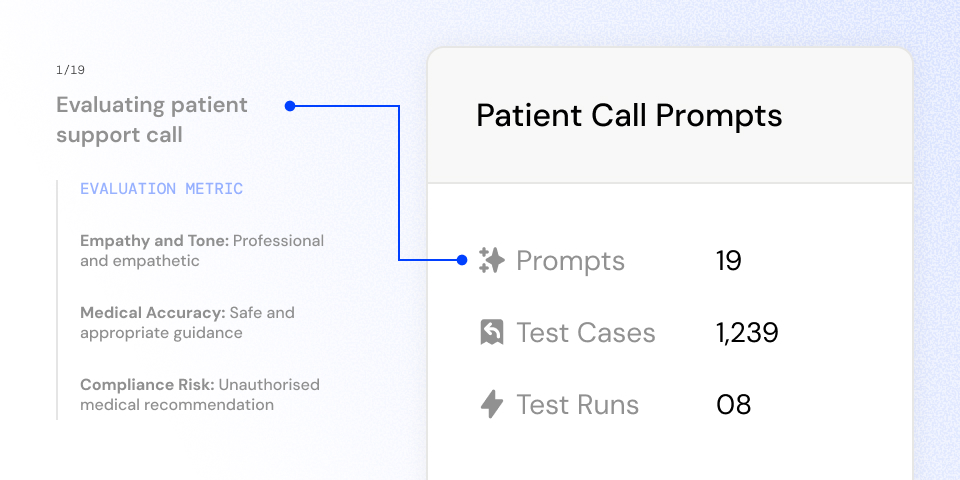

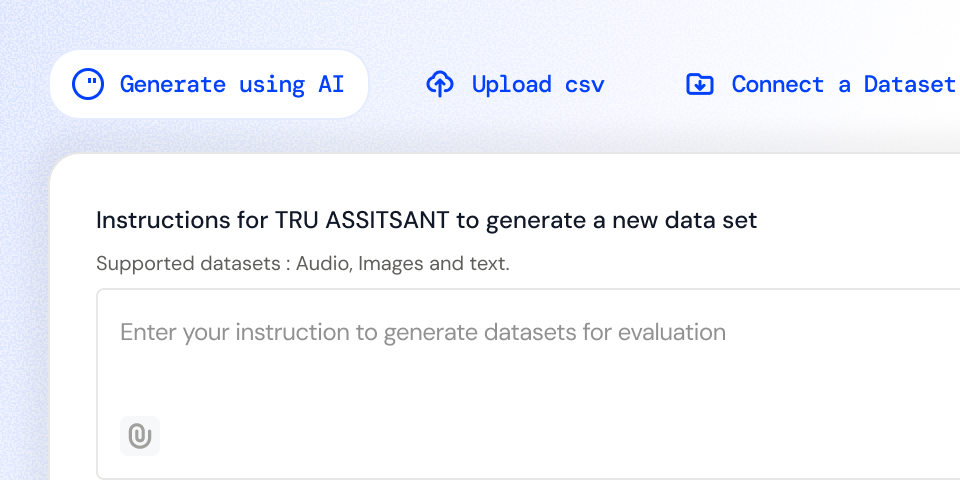

Design and run test cases tailored to your business needs, with flexible options for every workflow.

Receive clear, automated reports after each test. Stay informed and make data-driven decisions quickly.

Generate human-readable insights into every model score